ComfyUI-Frame-Interpolation

ComfyUI Frame Interpolation is a custom node set for video frame interpolation in ComfyUI. Its memory management has been improved, so it uses less RAM and VRAM. VFI nodes accept scheduling multiplier values. It offers various VFI nodes such as GMFSS Fortuna VFI and RIFE VFI. Installation can be done via ComfyUI Manager or from the command line. It supports non-CUDA devices experimentally. All VFI nodes are in the 'ComfyUI-Frame-Interpolation/VFI' category and require at least 2 frames. It also provides simple and complex workflow examples and cites the relevant research for each VFI node.

Fannovel16

Description

ComfyUI Frame Interpolation (ComfyUI VFI) (WIP)

A custom node set designed for Video Frame Interpolation within ComfyUI.

UPDATE: Memory management has been enhanced. Now, this extension consumes less RAM and VRAM compared to before.

UPDATE 2: VFI nodes now support scheduling multiplier values.

Nodes

- KSampler Gradually Adding More Denoise (efficient)

- GMFSS Fortuna VFI

- IFRNet VFI

- IFUnet VFI

- M2M VFI

- RIFE VFI (4.0 - 4.9) (Note that the fast_mode option has no effect from v4.5+ because contextnet has been removed)

- FILM VFI

- Sepconv VFI

- AMT VFI

- Make Interpolation State List

- STMFNet VFI (requires at least 4 frames and can currently only perform 2x interpolation)

- FLAVR VFI (has the same conditions as STMFNet)

Install

ComfyUI Manager

The incompatibility issue with ComfyUI Manager has now been resolved.

Follow this guide to install this extension: How to use ComfyUI-Manager

Command-line

Windows

Run install.bat.

For Windows users experiencing issues with cupy, please run install.bat instead of install-cupy.py or python install.py.

Linux

Open your shell application and activate the virtual environment if it is used for ComfyUI. Then run:

python install.py

Support for non-CUDA devices (experimental)

If you don't have an NVIDIA card, you can try the taichi ops backend powered by Taichi Lang.

On Windows, you can install it by running install.bat. On Linux, run pip install taichi.

Then, change the value of ops_backend from cupy to taichi in config.yaml.

If a NotImplementedError occurs, it means a VFI node in the workflow is not supported by Taichi.
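The backend switch can also be scripted. The following is a minimal sketch, assuming config.yaml contains a top-level line of the form `ops_backend: cupy`; the helper name `set_ops_backend` is hypothetical and not part of this extension. It works on the file's text with the standard library only, so it does not need a YAML parser.

```python
import re

def set_ops_backend(config_text: str, backend: str = "taichi") -> str:
    """Return config_text with the value of ops_backend replaced by `backend`.

    Assumption: the config has a top-level `ops_backend: <value>` line.
    Anything after the colon on that line (including a trailing comment)
    is replaced.
    """
    pattern = re.compile(r"^(ops_backend[ \t]*:[ \t]*)[^\r\n]*", flags=re.MULTILINE)
    new_text, count = pattern.subn(lambda m: m.group(1) + backend, config_text, count=1)
    if count == 0:
        raise ValueError("no ops_backend entry found in the config text")
    return new_text

# In-memory example; the second key is made up for illustration.
example = "ops_backend: cupy\nsome_other_key: 1\n"
print(set_ops_backend(example))
```

To apply it to the real file, read config.yaml into a string, pass it through the function, and write the result back. Keeping a backup of the original file is a sensible precaution.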

Usage

If the installation is successful, all VFI nodes can be found in the category ComfyUI-Frame-Interpolation/VFI. These nodes require an IMAGE containing frames (at least 2, or at least 4 for STMFNet/FLAVR).

Regarding STMFNet and FLAVR, if you only have two or three frames, you should use the following process: Load Images -> Another VFI node (FILM is recommended in this case) with multiplier=4 -> STMFNet VFI/FLAVR VFI.
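A little arithmetic shows why this pre-interpolation step satisfies the 4-frame minimum. The sketch below assumes the common convention that a multiplier of m inserts m - 1 new frames between each adjacent pair, so N input frames become (N - 1) * m + 1 output frames. The extension's exact output count may differ, and the function names are hypothetical.

```python
# Minimum input frames per node, as stated in the Usage text.
MIN_FRAMES = {"STMFNet VFI": 4, "FLAVR VFI": 4}
DEFAULT_MIN_FRAMES = 2

def frames_after_interpolation(n_frames: int, multiplier: int) -> int:
    """Assumed convention: (multiplier - 1) new frames between each adjacent pair."""
    if n_frames < 2:
        raise ValueError("interpolation needs at least 2 input frames")
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return (n_frames - 1) * multiplier + 1

def has_enough_frames(node_name: str, n_frames: int) -> bool:
    """Check a frame count against the node's minimum requirement."""
    return n_frames >= MIN_FRAMES.get(node_name, DEFAULT_MIN_FRAMES)

for n in (2, 3):
    out = frames_after_interpolation(n, multiplier=4)
    print(n, "->", out, has_enough_frames("STMFNet VFI", out))
# 2 -> 5 True
# 3 -> 9 True
```

Under this assumption, two input frames with multiplier=2 would yield only 3 frames, which is still below the minimum for STMFNet and FLAVR.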

The clear_cache_after_n_frames option is used to prevent out-of-memory errors. Decreasing this value reduces the risk but also increases the processing time.
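The trade-off can be illustrated with a generic loop that calls a cache-clearing callback after every n processed frame pairs. This is not the extension's actual code: `process_frames`, `interpolate_pair`, and `clear_cache` are hypothetical names, and in a real PyTorch pipeline the callback would typically be something like `torch.cuda.empty_cache`.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")

def process_frames(
    frames: Sequence[T],
    interpolate_pair: Callable[[T, T], T],
    clear_cache: Callable[[], None],
    clear_cache_after_n_frames: int = 10,
) -> List[T]:
    """Insert one interpolated frame between each adjacent pair (2x),
    clearing the cache after every `clear_cache_after_n_frames` pairs."""
    if clear_cache_after_n_frames < 1:
        raise ValueError("clear_cache_after_n_frames must be at least 1")
    output: List[T] = []
    since_clear = 0
    for first, second in zip(frames, frames[1:]):
        output.append(first)
        output.append(interpolate_pair(first, second))
        since_clear += 1
        if since_clear >= clear_cache_after_n_frames:
            clear_cache()  # frees memory, but costs time on every call
            since_clear = 0
    if frames:
        output.append(frames[-1])  # the last input frame has no successor
    return output

# Toy example: frames are plain numbers, interpolation is the midpoint.
clears = []
frames = [float(i) for i in range(10)]
result = process_frames(
    frames,
    lambda a, b: (a + b) / 2,
    lambda: clears.append(1),
    clear_cache_after_n_frames=3,
)
print(len(result), len(clears))  # 19 3
```

With the same input, setting clear_cache_after_n_frames=1 would trigger 9 clears instead of 3. Peak memory use drops, while the extra calls add to the total running time.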

It is recommended to use LoadImages (LoadImagesFromDirectory) from ComfyUI-Advanced-ControlNet and ComfyUI-VideoHelperSuite in conjunction with this ComfyUI-Frame-Interpolation extension.

Example

Simple workflow

Workflow metadata is not embedded.

Download the two images anime0.png and anime1.png and place them in a folder such as E:\test, as shown in the image below.

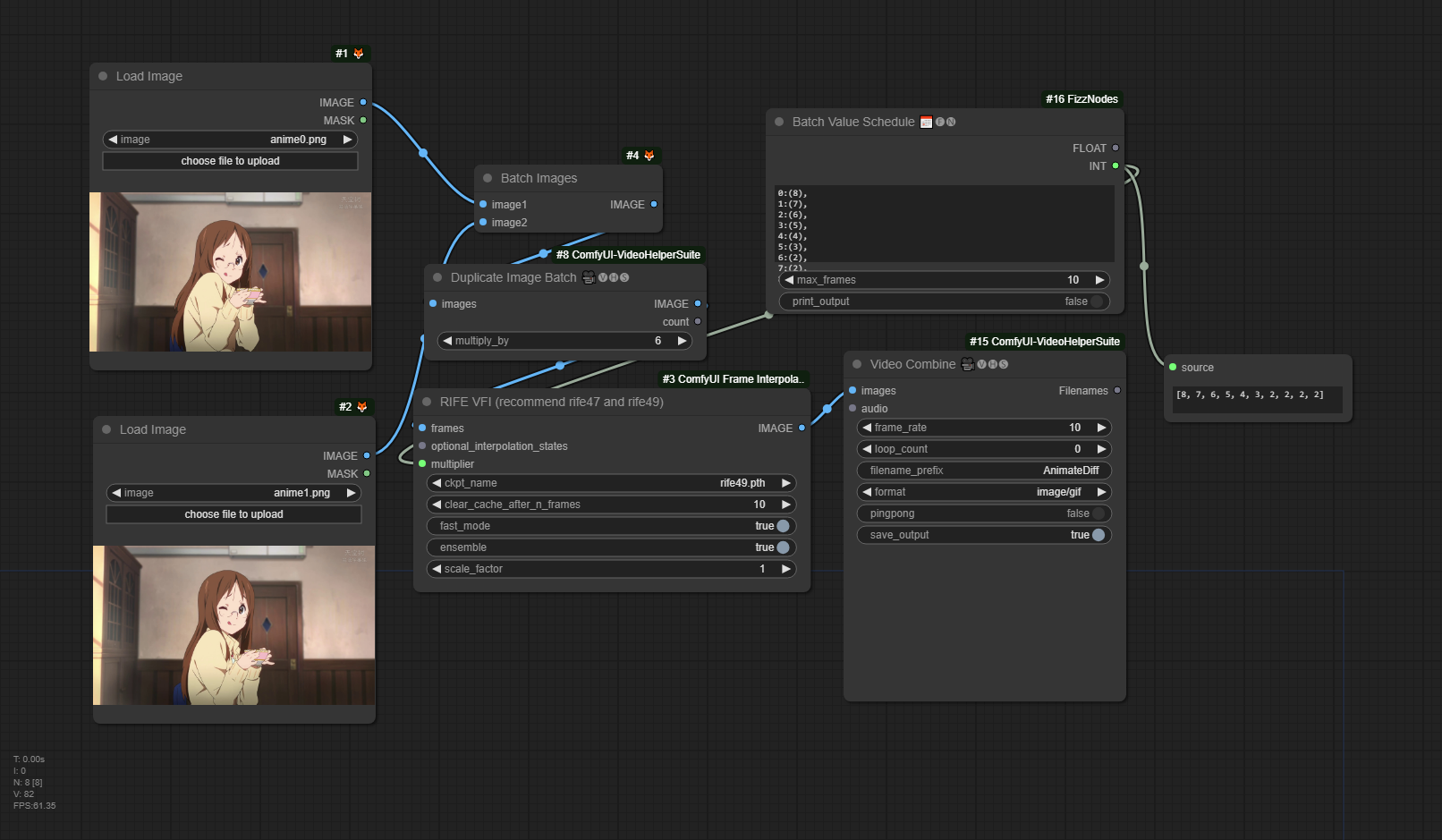

Complex workflow

This workflow is used in AnimationDiff and can load workflow metadata.

Credit

We express our sincere gratitude to styler00dollar for creating VSGAN-tensorrt-docker. Approximately 99% of the code in this ComfyUI-Frame-Interpolation repository is derived from it.

Citation for each VFI node:

GMFSS Fortuna

The All-In-One GMFSS: Dedicated for Anime Video Frame Interpolation GMFSS Fortuna GitHub

IFRNet

@InProceedings{Kong_2022_CVPR,

author = {Kong, Lingtong and Jiang, Boyuan and Luo, Donghao and Chu, Wenqing and Huang, Xiaoming and Tai, Ying and Wang, Chengjie and Yang, Jie},

title = {IFRNet: Intermediate Feature Refine Network for Efficient Frame Interpolation},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2022}

}

IFUnet

RIFE with IFUNet, FusionNet and RefineNet IFUnet GitHub

M2M

@InProceedings{hu2022m2m,

title={Many-to-many Splatting for Efficient Video Frame Interpolation},

author={Hu, Ping and Niklaus, Simon and Sclaroff, Stan and Saenko, Kate},

booktitle={CVPR},

year={2022}

}

RIFE

@inproceedings{huang2022rife,

title={Real-Time Intermediate Flow Estimation for Video Frame Interpolation},

author={Huang, Zhewei and Zhang, Tianyuan and Heng, Wen and Shi, Boxin and Zhou, Shuchang},

booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},

year={2022}

}

FILM

Frame interpolation in PyTorch

@inproceedings{reda2022film,

title = {FILM: Frame Interpolation for Large Motion},

author = {Fitsum Reda and Janne Kontkanen and Eric Tabellion and Deqing Sun and Caroline Pantofaru and Brian Curless},

booktitle = {European Conference on Computer Vision (ECCV)},

year = {2022}

}

@misc{film-tf,

title = {Tensorflow 2 Implementation of "FILM: Frame Interpolation for Large Motion"},

author = {Fitsum Reda and Janne Kontkanen and Eric Tabellion and Deqing Sun and Caroline Pantofaru and Brian Curless},

year = {2022},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/google-research/frame-interpolation}}

}

Sepconv

[1] @inproceedings{Niklaus_WACV_2021,

author = {Simon Niklaus and Long Mai and Oliver Wang},

title = {Revisiting Adaptive Convolutions for Video Frame Interpolation},

booktitle = {IEEE Winter Conference on Applications of Computer Vision},

year = {2021}

}

[2] @inproceedings{Niklaus_ICCV_2017,

author = {Simon Niklaus and Long Mai and Feng Liu},

title = {Video Frame Interpolation via Adaptive Separable Convolution},

booktitle = {IEEE International Conference on Computer Vision},

year = {2017}

}

[3] @inproceedings{Niklaus_CVPR_2017,

author = {Simon Niklaus and Long Mai and Feng Liu},

title = {Video Frame Interpolation via Adaptive Convolution},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition},

year = {2017}

}

AMT

@inproceedings{licvpr23amt,

title={AMT: All-Pairs Multi-Field Transforms for Efficient Frame Interpolation},

author={Li, Zhen and Zhu, Zuo-Liang and Han, Ling-Hao and Hou, Qibin and Guo, Chun-Le and Cheng, Ming-Ming},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2023}

}

ST-MFNet

@InProceedings{Danier_2022_CVPR,

author = {Danier, Duolikun and Zhang, Fan and Bull, David},

title = {ST-MFNet: A Spatio-Temporal Multi-Flow Network for Frame Interpolation},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {3521-3531}

}

FLAVR

@article{kalluri2021flavr,

title={FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation},

author={Kalluri, Tarun and Pathak, Deepak and Chandraker, Manmohan and Tran, Du},

journal={arXiv},

year={2021}

}